I urge everyone to check out this genuinely frightening piece over at the “io9 Blog” by George Dvorsky – one of the most knowledgeable guys around when it comes to studying and explaining the benefits and risks associated with emerging forms of artificial intelligence.

This particular article is concerning enough in its own right: it reports the revelation by computer virus expert Eugene Kaspersky that the Stuxnet worm – believed to have been created as a joint US-Israeli effort to slow Iran’s development of nuclear weapons – has recently infected both a Russian nuclear power plant AND the International Space Station. It’s the underlying implications, however, that really matter.

Although it remains unclear what damage – if any – these specific Stuxnet incidents have actually caused, Dvorsky makes three excellent points as to why we ought to take this type of occurrence extremely seriously:

1. Neither the space station nor the nuclear plant is connected to the public Internet, meaning that these infections were probably spread directly through hardware rather than over a network.
2. Stuxnet contains what is known as a “programmable logic controller rootkit for the automation of electromechanical processes.” In other words, although it exists as non-physical code, it’s fully capable of controlling and destroying physical machines.
3. We don’t yet know whether Stuxnet or similar entities might (much like biological viruses) experience unforeseen “mutations” caused by environmental factors. For example, their code might begin to function very differently inside a Russian-designed software platform than within an Iranian-coded system.

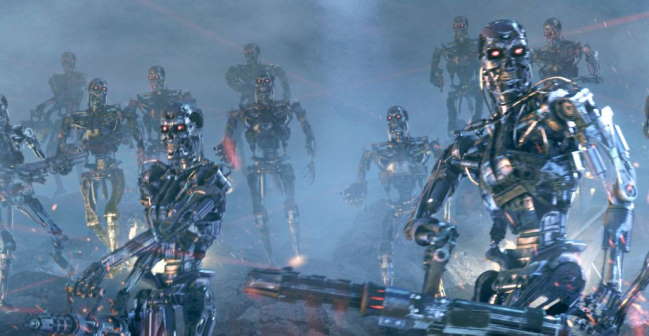

What’s the upshot? Essentially, that something ultra-advanced – say, a Terminator-esque “Skynet” – IS NOT REMOTELY REQUIRED to cause massive havoc in the physical human world. Even if Stuxnet itself doesn’t turn out to cause major problems, given the incredibly rapid development of cyber-weaponry around the globe, it’s only a matter of time before one of these things adopts its own agenda or vastly expands whatever mission it was originally designed to accomplish. Put slightly differently, what’s truly scary is not the emergence of self-awareness or so-called “strong artificial intelligence” (at least not yet), but rather the fact that today’s “stupid AI” devices already exist and could begin acting in ways their programmers neither predicted nor are able to stop.

Dvorsky offers a much deeper dive into this fascinating and – yes – somewhat terrifying subject in a longer article available here. READ IT. Seeing as virtually everything we depend upon (planes that fly, powerful weapons hitting the right targets, GPS navigation, operational power grids, water supply systems, electronic medical records, financial markets and the functioning of almost every imaginable governmental service…) could be severely impacted by a “rogue event” like the ones identified by Kaspersky, this is an imminent issue. It deserves careful attention NOW – from all of us.

One final, personal aside: as a wealth manager, I have a primary fiduciary responsibility to protect and thoughtfully steward my clients’ assets. Now that an enormous volume of trading is handled by high-frequency computer systems, should something go wrong (and, of course, we’ve already seen this happen on a relatively small scale), it could literally become impossible for me to fulfill my obligations to clients because, while I always strive to be a true professional, I (usually) can’t react in nanoseconds. Even high-frequency traders themselves acknowledge the very real possibility of losing total control over their systems due to error, negligence or cyber-crime. Hence, there is NOTHING abstract or “science fiction-y” about the issues Dvorsky is ultimately addressing – certainly not for me.